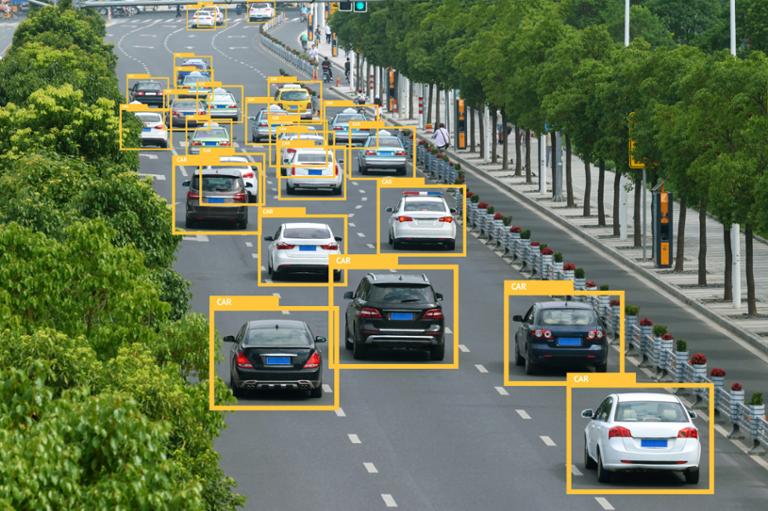

For those tech pros interested in cybersecurity, artificial intelligence (A.I.) and machine learning represent the next great frontier of danger and opportunity. In theory, A.I. platforms will harden network defenses, but they could also provide new ways to hack systems. If you’re a paranoid sysadmin or cybersecurity expert, it’s easy to picture a nightmare scenario in which an ultra-sophisticated A.I. straight out of a science-fiction movie plows through your network defenses, destroying or corrupting systems. But the humans tasked with network defense may soon have a powerful ally: A.I. designed to prevent A.I.-enhanced hacks.

Google Brain, a research project within Google dedicated to advancing deep learning and A.I., is hosting a contest on Kaggle, a platform for data-science competitions. The goal: have A.I. researchers design attacks on, and defenses for, machine-learning systems that recognize and classify images. Specifically, Google Brain wants tech pros to design two kinds of attack: a “Non-Targeted Adversarial Attack,” which slightly modifies a source image so that a machine-learning classifier labels it incorrectly, and a “Targeted Adversarial Attack,” which modifies the image so that the classifier assigns it a specific label chosen by the attacker. It also wants pros to come up with a defense (“Defense Against Adversarial Attack”) that holds up against those attacks.

Why does modifying source images matter? If an A.I. platform accepts a doctored image as genuine, it may draw incorrect conclusions. For example, if a hacker could trick an autonomous-driving platform into mistaking red lights for green ones, the result could be unmitigated chaos. (Last year, Popular Science published an extensive article detailing how modified images can fool even a sophisticated deep-learning network.) A research note published by OpenAI frames these “adversarial images” as the machine equivalent of optical illusions, or hallucinations.

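To make the two attack types concrete, here is a minimal sketch using the fast gradient sign method (FGSM), a well-known technique for crafting adversarial images; it is one possible approach, not necessarily what contest entrants used. The classifier is a hypothetical toy: a linear model over a four-“pixel” image, with made-up weights and labels (`red_light`, `green_light`, `yellow_light`) that nod to the traffic-light example. A non-targeted attack nudges each pixel by `eps` in the direction that increases the loss for the true label; a targeted attack nudges each pixel in the direction that decreases the loss for an attacker-chosen label. Real attacks go after deep networks and compute gradients with a framework, but the sign-of-the-gradient step is the same idea.

```python
import math

# Hypothetical toy classifier: 3 classes, 4-"pixel" images, linear logits.
# Labels and weights are made up purely for illustration.
LABELS = ["red_light", "green_light", "yellow_light"]
WEIGHTS = [
    [1.0, -1.0, 0.0, 0.0],   # red_light
    [-1.0, 1.0, 0.0, 0.0],   # green_light
    [0.0, 0.0, 1.0, -1.0],   # yellow_light
]


def logits(x):
    """Linear score for each class: dot(W_k, x)."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in WEIGHTS]


def softmax(z):
    """Numerically stable softmax over the class scores."""
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def predict(x):
    """Index of the highest-scoring class."""
    z = logits(x)
    return z.index(max(z))


def loss_gradient(x, label):
    """Gradient of the cross-entropy loss for `label` w.r.t. the input x.

    For linear logits z = W x, dL/dx = sum_k (p_k - onehot_k) * W_k.
    """
    p = softmax(logits(x))
    grad = [0.0] * len(x)
    for k, row in enumerate(WEIGHTS):
        coeff = p[k] - (1.0 if k == label else 0.0)
        for i, w in enumerate(row):
            grad[i] += coeff * w
    return grad


def sign(v):
    """Return 1, 0, or -1 depending on the sign of v."""
    return (v > 0) - (v < 0)


def fgsm(x, label, eps, targeted=False):
    """One FGSM step, clipped to the valid pixel range [0, 1].

    Non-targeted: step up the loss of the true label (push away from it).
    Targeted: step down the loss of the target label (pull toward it).
    """
    grad = loss_gradient(x, label)
    direction = -1.0 if targeted else 1.0
    return [min(1.0, max(0.0, xi + direction * eps * sign(g)))
            for xi, g in zip(x, grad)]


if __name__ == "__main__":
    image = [0.6, 0.4, 0.5, 0.5]
    print("original:", LABELS[predict(image)])
    untargeted = fgsm(image, label=0, eps=0.15)
    print("non-targeted:", LABELS[predict(untargeted)])
    targeted = fgsm(image, label=1, eps=0.15, targeted=True)
    print("targeted:", LABELS[predict(targeted)])
```

Running the script reports `red_light` for the original image, `yellow_light` after the non-targeted step, and `green_light` after the targeted step: a change of at most 0.15 per pixel is enough to flip the toy model’s answer, and the targeted variant lands on the label the attacker picked.
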
Whatever the analogy, it’s clear that such attacks are a major issue, considering the number of tech firms interested in leveraging A.I. for mission-critical apps and functions. Developing A.I. systems capable of thwarting these (and other) attacks could prove vital. If this sort of thing interests you, check out Kaggle’s contest. And if you’re breaking into cybersecurity as a profession, be prepared to discuss how A.I. might change the security landscape in coming years—and what companies are doing to counter a new breed of threats.